43 soft labels machine learning

ARIMA for Classification with Soft Labels | by Marco Cerliani | Towards ... In this post, we introduced a technique to carry out classification tasks with soft labels and regression models. First, we applied it to tabular data, and then we used it to model time series with ARIMA. In general, it is applicable in any context and scenario, and it also provides probability scores.

Machine learning - Wikipedia Machine learning (ML) ... Some of the training examples are missing training labels, yet many machine-learning researchers have found that unlabeled data, when used in conjunction with a small amount of labeled data, can produce a considerable improvement in learning accuracy. In weakly supervised learning, the training labels are noisy, limited, or imprecise; however, these …
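
The ARIMA post's core idea, fitting a regression model to soft labels and reading its output as a probability score, can be sketched in plain Python. This is a minimal illustration under stated assumptions: it swaps ARIMA for ordinary least squares on a single feature, the data are hypothetical, and clipping to [0, 1] plus a 0.5 threshold are our choices rather than necessarily the author's.

```python
# Hypothetical toy data: one numeric feature per example, soft labels in [0, 1]
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
soft_labels = [0.05, 0.1, 0.3, 0.7, 0.9, 0.95]


def fit_least_squares(x, y):
    """Ordinary least squares for y ~ a + b * x with a single feature."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    var = sum((xi - mean_x) ** 2 for xi in x)
    b = cov / var
    a = mean_y - b * mean_x
    return a, b


def predict_scores(a, b, x):
    """Regression outputs clipped to [0, 1] so they can be read as probability scores."""
    return [min(1.0, max(0.0, a + b * xi)) for xi in x]


a, b = fit_least_squares(xs, soft_labels)
scores = predict_scores(a, b, xs)
hard_labels = [int(s >= 0.5) for s in scores]  # threshold the scores to get classes
print(hard_labels)  # [0, 0, 0, 1, 1, 1]
```

Any regressor with a numeric output could take the place of the least-squares line; the soft labels are simply used as the regression target.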

Creating targets for machine learning labels - Python Programming Again, being careful about infinite numbers, and then filling any other missing data, we are finally ready to create our features and labels at the end of the function: `X = df_vals.values`, then `y = df['{}_target'.format(ticker)].values`, and finally `return X, y, df`. The capital X contains our feature sets (daily % changes for every company in the S&P 500).
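
The tutorial's target column is built before this step. A minimal sketch of one way to derive such a target, assuming a hypothetical 2% threshold and hypothetical names (`buy_sell_hold`, `REQUIREMENT`), maps a ticker's future percentage changes to a hard buy/sell/hold label:

```python
REQUIREMENT = 0.02  # hypothetical threshold: a 2% move counts as a signal


def buy_sell_hold(*future_changes, requirement=REQUIREMENT):
    """Return 1 (buy), -1 (sell) or 0 (hold) for a sequence of future % changes.

    The first change that moves beyond +/- requirement decides the label;
    if none does, the label is hold.
    """
    for change in future_changes:
        if change > requirement:
            return 1
        if change < -requirement:
            return -1
    return 0


# Hypothetical future % changes of one ticker over the next three days
rows = [
    (0.001, 0.005, 0.030),   # rises past +2% on day 3
    (-0.025, 0.010, 0.000),  # falls past -2% on day 1
    (0.004, -0.003, 0.010),  # never crosses the threshold
]
targets = [buy_sell_hold(*row) for row in rows]
print(targets)  # [1, -1, 0]
```

In a pandas pipeline, a function like this could be applied row-wise over the future-change columns to fill the target column; that wiring is omitted here.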

weijiaheng/Advances-in-Label-Noise-Learning - GitHub 15.06.2022 · A Novel Perspective for Positive-Unlabeled Learning via Noisy Labels. Ensemble Learning with Manifold-Based Data Splitting for Noisy Label Correction. MetaLabelNet: Learning to Generate Soft-Labels from Noisy-Labels. On the Robustness of Monte Carlo Dropout Trained with Noisy Labels.

Machine Learning Algorithm - an overview | ScienceDirect Topics The second major class of machine learning algorithms is called unsupervised learning, in which the input data have no accompanying labels. Such problems are solved by finding some useful structure in the input data, for example through a procedure called clustering. Once this structure is discovered in the form of clusters, groups, or other interesting subsets, this composition will be used …

python - scikit-learn classification on soft labels - Stack Overflow Generally speaking, the form of the labels ("hard" or "soft") is determined by the algorithm chosen for prediction and by the target data on hand. If your data has "hard" labels and you want a "soft" label output from your model (which can be thresholded to give a "hard" label), then yes, logistic regression is in this category.
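
To make the Stack Overflow point concrete, here is a minimal sketch of logistic regression trained directly on soft targets with plain gradient descent. It assumes a single feature and hypothetical data, and it is written from scratch because scikit-learn's classifiers generally expect discrete class labels. The cross-entropy gradient with respect to the logit is p - y whether y is 0/1 or a probability, so the training loop needs no changes.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_soft(xs, ys, lr=0.5, epochs=2000):
    """Fit p(x) = sigmoid(w * x + b) by batch gradient descent on binary cross-entropy.

    ys may be soft labels in [0, 1]: the gradient of the loss with respect
    to the logit is p - y either way, so hard and soft targets share one loop.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Hypothetical data: the soft labels rise with x
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [0.1, 0.2, 0.5, 0.8, 0.9]
w, b = train_logistic_soft(xs, ys)
probs = [sigmoid(w * x + b) for x in xs]  # soft output
hard = [int(p >= 0.5) for p in probs]     # thresholded hard output
```

The model outputs `probs` as soft labels, and thresholding them at 0.5 gives the hard labels the answer mentions.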

Data Labeling Software: Best Tools for Data Labeling - Neptune In machine learning and AI development, data labeling is essential. You need a structured set of training data that an ML system can learn from, and it takes a lot of effort to create accurately labeled datasets. Data labeling tools come in very handy because they can automate the labeling process, which […]

Pros and Cons of Supervised Machine Learning - Pythonista Planet Another typical task of supervised machine learning is to predict a numerical target value from some given data and labels. I hope you've understood the advantages of supervised machine learning. Now, let us take a look at the disadvantages. There are plenty of cons; some of them are given below. Cons of Supervised Machine Learning

machine learning - What are soft classes? - Cross Validated As a result, the weights will probably bounce back and forth, because the two examples push them in different directions. That's when soft classes can be helpful. They allow you to train the network with a label like x -> [0.5, 0, 0.5, 0, 0]. Note that this is a valid probability distribution, so it is compatible with the cross-entropy loss.

Label Smoothing - Lei Mao's Log Book In machine learning or deep learning, we usually use many regularization techniques, such as L1, L2, and dropout, to prevent our model from overfitting. ... Label smoothing is a regularization technique for classification problems that prevents the model from predicting the labels too confidently during training and thus generalizing poorly.
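
A minimal sketch of the cross-entropy loss with the soft target x -> [0.5, 0, 0.5, 0, 0] from the Cross Validated answer: the loss is lowest when the predicted distribution matches the target, and a prediction that commits to a single class is penalized. The prediction vectors are hypothetical.

```python
import math


def cross_entropy(target, predicted, eps=1e-12):
    """H(target, predicted) = -sum_k target_k * log(predicted_k).

    Works for one-hot and soft targets alike; eps guards against log(0).
    """
    return -sum(t * math.log(max(p, eps)) for t, p in zip(target, predicted))


soft_target = [0.5, 0.0, 0.5, 0.0, 0.0]        # the label x -> [0.5, 0, 0.5, 0, 0]
matching = [0.5, 0.0, 0.5, 0.0, 0.0]           # prediction equal to the target
confident = [0.9, 0.025, 0.025, 0.025, 0.025]  # prediction committed to class 0

print(round(cross_entropy(soft_target, matching), 4))   # 0.6931 (= log 2)
print(round(cross_entropy(soft_target, confident), 4))  # 1.8971
```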

Is it okay to use cross entropy loss function with soft labels? In the case of 'soft' labels like the ones you mention, the labels are no longer class identities themselves but probabilities over two possible classes. Because of this, you can't use the standard expression for the log loss. But the concept of cross-entropy still applies; in fact, it seems even more natural in this case.

Research - Apple Machine Learning Research Explore advancements in state-of-the-art machine learning research in speech and natural language, privacy, computer vision, health, and more.

Label smoothing with Keras, TensorFlow, and Deep Learning This type of label assignment is called soft label assignment. Unlike hard label assignment, where class labels are binary (i.e., positive for one class and negative for all other classes), soft label assignment allows the positive class to have the largest probability while all other classes have a very small probability.

Learning Soft Labels via Meta Learning - Apple Machine Learning Research Using soft labels as targets provides regularization, but different soft labels might be optimal at different stages of optimization. Also, training with fixed labels in the presence of noisy annotations leads to worse generalization.
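
Label smoothing, as described in the Keras/TensorFlow snippet, turns a one-hot label into a soft label. A minimal sketch using the common formula y_ls = (1 - epsilon) * y + epsilon / K, where K is the number of classes and epsilon = 0.1 is an assumed value; Keras exposes the same idea through the `label_smoothing` argument of `tf.keras.losses.CategoricalCrossentropy`.

```python
def smooth_labels(one_hot, epsilon=0.1):
    """Label smoothing: y_ls = (1 - epsilon) * y + epsilon / K, with K classes."""
    k = len(one_hot)
    return [(1.0 - epsilon) * y + epsilon / k for y in one_hot]


hard_label = [0.0, 0.0, 1.0, 0.0]         # one-hot: class 2 out of 4
soft_label = smooth_labels(hard_label)    # epsilon = 0.1 (assumed)
print([round(v, 3) for v in soft_label])  # [0.025, 0.025, 0.925, 0.025]
```

The positive class keeps the largest probability, every other class gets a small nonzero share, and the result still sums to 1.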

The Ultimate Guide to Data Labeling for Machine Learning - CloudFactory In machine learning, if you have labeled data, your data is marked up, or annotated, to show the target, which is the answer you want your machine learning model to predict. In general, data labeling can refer to tasks that include data tagging, annotation, classification, moderation, transcription, or processing.

MNIST For Machine Learning Beginners With Softmax Regression This is a tutorial for beginners interested in learning about MNIST and softmax regression using machine learning (ML) and TensorFlow. When we start learning to program, the first thing we learn is to print "Hello World". Just as Hello World is the entry point to programming, MNIST is the starting point for machine learning.

What is machine learning? | Microsoft Azure Machine learning (ML) is the process of using mathematical models of data to help a computer learn without direct instruction. It's considered a subset of artificial intelligence (AI). Machine learning uses algorithms to identify patterns within data, and those patterns are then used to create a data model that can make predictions. With ...

Unsupervised Machine Learning: Examples and Use Cases Unsupervised machine learning is the process of inferring underlying hidden patterns from historical data. In this approach, a machine learning model tries to find similarities, differences, patterns, and structure in data by itself, with no prior human intervention needed. Let's get back to our example of a child's experiential learning. Picture a toddler. The child knows …

What is the definition of "soft label" and "hard label"? A soft label is one that has a score (probability or likelihood) attached to it. That is, the element is a member of the class in question with a probability/likelihood score of, e.g., 0.7; this implies that an element can be a member of multiple classes (presumably with different membership scores), which is usually not possible with hard labels.
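
The definition above can be illustrated with a small data structure: a soft label as a mapping from class to membership score. The class names, scores, and the 0.15 multi-label threshold are hypothetical; the helpers show how a soft label collapses to a hard one, how an element can belong to several classes, and how a hard label is the special case with all mass on one class.

```python
# A soft label: class -> membership score (hypothetical classes and scores)
soft_label = {"cat": 0.7, "dog": 0.2, "fox": 0.1}


def to_hard(label):
    """Collapse a soft label to its single highest-scoring class."""
    return max(label, key=label.get)


def member_classes(label, threshold=0.15):
    """Classes whose score clears a (hypothetical) threshold; there may be several."""
    return sorted(c for c, score in label.items() if score >= threshold)


def hard_as_soft(cls, classes):
    """A hard label viewed as a soft label with all mass on one class."""
    return {c: (1.0 if c == cls else 0.0) for c in classes}


print(to_hard(soft_label))         # cat
print(member_classes(soft_label))  # ['cat', 'dog']
print(hard_as_soft("cat", ["cat", "dog", "fox"]))  # {'cat': 1.0, 'dog': 0.0, 'fox': 0.0}
```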

Inverse Text Normalization as a Labeling Problem - Apple Machine ... In most speech recognition systems, a core speech recognizer produces a spoken-form token sequence which is converted to written form through a process called inverse text normalization (ITN). ITN includes formatting entities like numbers, dates, times, and addresses. Table 1 shows examples of spoken-form input and written-form output.

Learning classification models with soft-label information Materials and methods: Two types of methods that can learn improved binary classification models from soft labels are proposed. The first relies on probabilistic/numeric labels, the other on ordinal categorical labels. We study and demonstrate the benefits of these methods for learning an alerting model for heparin-induced thrombocytopenia.

Set up image labeling project - Azure Machine Learning An Azure Machine Learning dataset with labels. Access exported Azure Machine Learning datasets in the Datasets section of Machine Learning. The dataset details page also provides sample code to access your labels from Python. Once you have exported your labeled data to an Azure Machine Learning dataset, you can use AutoML to build computer ...

[D] Instance weighting with soft labels. : MachineLearning - reddit Suppose you are given training instances with soft labels, i.e., your training instances are of the form (x, y, p), where x is the input, y is the class, and p is the probability that x is of class y. Some classifiers allow you to specify an instance weight for each example in the training set.
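
One common answer to this question, restricted here to the binary case, is to expand each (x, y, p) triple into two hard-labelled instances: (x, y) with weight p and (x, not y) with weight 1 - p. The sketch below uses hypothetical helper names and a one-weight logistic model to check that the weighted cross-entropy gradient equals the soft-label gradient, so the two formulations train identically.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def expand_soft_instance(x, p):
    """Binary case: (x, class 1, p) -> two hard-labelled instances with weights p and 1 - p."""
    return [(x, 1, p), (x, 0, 1.0 - p)]


def soft_label_grad(w, x, p):
    """d/dw of soft-label BCE, -[p log s + (1 - p) log(1 - s)], with s = sigmoid(w * x)."""
    return (sigmoid(w * x) - p) * x


def weighted_hard_grad(w, instances):
    """d/dw of the instance-weighted BCE summed over hard-labelled instances."""
    return sum(weight * (sigmoid(w * x) - y) * x for x, y, weight in instances)


w, x, p = 0.3, 2.0, 0.7  # hypothetical weight, input and soft label
print(soft_label_grad(w, x, p))
print(weighted_hard_grad(w, expand_soft_instance(x, p)))  # same value
```

For more than two classes, the remaining 1 - p would have to be split across the other classes somehow; the snippet does not say how, so the sketch stays binary.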

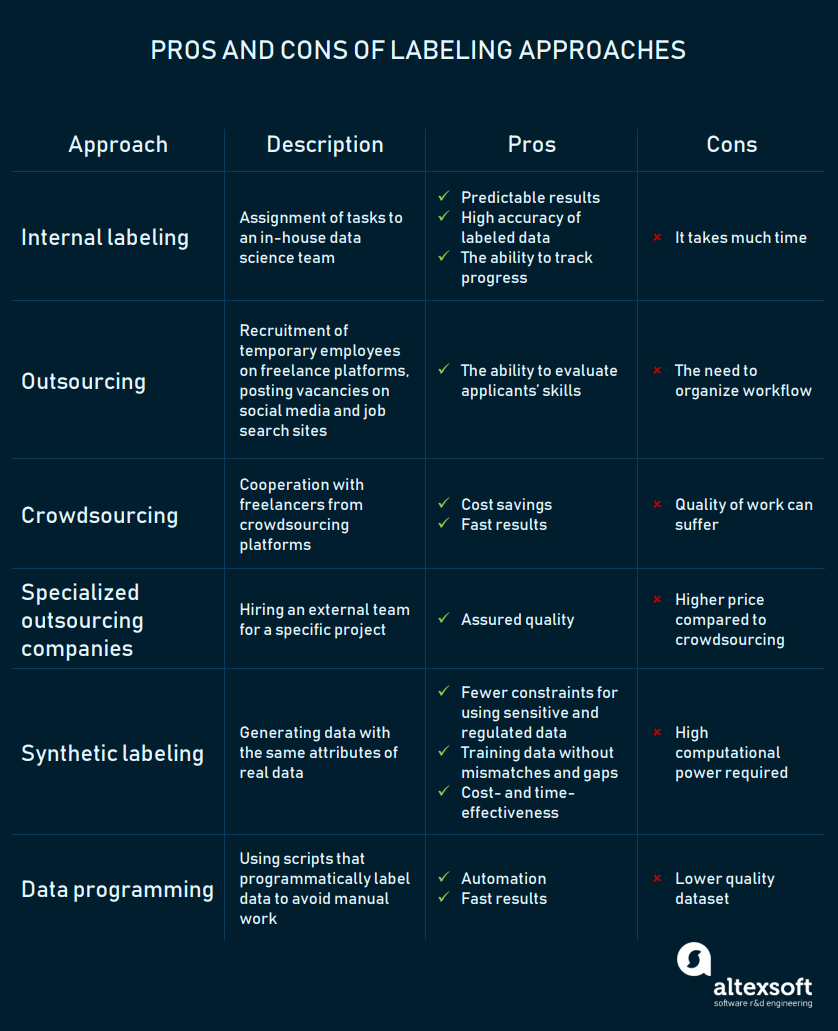

How to Label Data for Machine Learning: Process and Tools - AltexSoft Data labeling (or data annotation) is the process of adding target attributes to training data and labeling them so that a machine learning model can learn what predictions it is expected to make. This process is one of the stages in preparing data for supervised machine learning.

[2009.09496] Learning Soft Labels via Meta Learning - arXiv.org Learning Soft Labels via Meta Learning. Nidhi Vyas, Shreyas Saxena, Thomas Voice. One-hot labels do not represent soft decision boundaries among concepts, and hence models trained on them are prone to overfitting. Using soft labels as targets provides regularization, but different soft labels might be optimal at different stages of optimization.

Clustering in Machine Learning: 3 Types of Clustering Explained 30.11.2020 · 6 min read Introduction Machine learning is one of the hottest technologies of 2020, and as data grows day by day, the need for machine learning is also increasing exponentially. Machine learning is a vast topic, with different algorithms and use cases in each domain and industry. One of these is unsupervised learning, in which […]

Pseudo Labelling - A Guide To Semi-Supervised Learning There are three kinds of machine learning approaches: supervised, unsupervised, and reinforcement learning. Supervised learning, as we know, is where both data and labels are present. Unsupervised learning is where only data and no labels are present. Reinforcement learning is where agents learn from the rewards generated by the actions they take.

Semi-Supervised Learning With Label Propagation - Machine Learning Mastery Nodes in the graph then receive soft labels, or label distributions, based on the labels or label distributions of nearby connected examples in the graph. Many semi-supervised learning algorithms rely on the geometry of the data induced by both labeled and unlabeled examples to improve on supervised methods that use only the labeled data.
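
A minimal sketch of the idea, assuming an unweighted graph given as an adjacency dict: labelled nodes stay clamped to their one-hot labels, and each unlabelled node repeatedly takes the average of its neighbours' label distributions until the soft labels settle. This is a simplified variant written for illustration; scikit-learn's `sklearn.semi_supervised.LabelPropagation` instead builds a weighted graph from a kernel.

```python
def propagate_labels(adjacency, seeds, n_classes, iterations=100):
    """Simplified label propagation on an unweighted, undirected graph.

    adjacency: dict mapping node -> list of neighbour nodes
    seeds: dict mapping labelled node -> class index (these stay clamped)
    Returns a dict mapping every node -> soft label (list of class probabilities).
    """
    dist = {}
    for node in adjacency:
        if node in seeds:
            dist[node] = [1.0 if k == seeds[node] else 0.0 for k in range(n_classes)]
        else:
            dist[node] = [1.0 / n_classes] * n_classes  # start uninformed
    for _ in range(iterations):
        updated = {}
        for node, neighbours in adjacency.items():
            if node in seeds or not neighbours:
                updated[node] = dist[node]  # clamp seeds; isolated nodes keep their prior
                continue
            # average the neighbours' current label distributions
            updated[node] = [
                sum(dist[nb][k] for nb in neighbours) / len(neighbours)
                for k in range(n_classes)
            ]
        dist = updated
    return dist


# Hypothetical chain a - b - c - d - e with only the two ends labelled
graph = {
    "a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c", "e"], "e": ["d"],
}
soft = propagate_labels(graph, seeds={"a": 0, "e": 1}, n_classes=2)
print({n: [round(v, 3) for v in soft[n]] for n in "bcd"})
# {'b': [0.75, 0.25], 'c': [0.5, 0.5], 'd': [0.25, 0.75]}
```

The unlabelled nodes end up with soft labels that reflect their distance to each labelled end, which is the "label distribution" the snippet describes.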

What is Label Smoothing? A technique to make your model less… | by ... Label smoothing is used when the loss function is cross-entropy and the model applies the softmax function to the penultimate layer's logit vector $z$ to compute its output probabilities $p$. In this setting, the gradient of the cross-entropy loss with respect to the logits is simply $\nabla_z \mathrm{CE} = p - y = \mathrm{softmax}(z) - y$.
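
The gradient formula can be checked numerically. A minimal sketch, with hypothetical logits and a label-smoothed target (epsilon = 0.1 over three classes), compares softmax(z) - y with a central finite-difference estimate of the cross-entropy gradient. The identity relies on the entries of y summing to 1, which holds for one-hot, smoothed, and other soft targets.

```python
import math


def softmax(z):
    m = max(z)  # subtract the max logit for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_from_logits(z, y):
    return -sum(yk * math.log(pk) for yk, pk in zip(y, softmax(z)))


def analytic_grad(z, y):
    """dCE/dz = softmax(z) - y; exact whenever the entries of y sum to 1."""
    return [pk - yk for pk, yk in zip(softmax(z), y)]


def numeric_grad(z, y, h=1e-6):
    """Central finite-difference estimate of dCE/dz, used only as a check."""
    grad = []
    for i in range(len(z)):
        up = list(z)
        down = list(z)
        up[i] += h
        down[i] -= h
        diff = cross_entropy_from_logits(up, y) - cross_entropy_from_logits(down, y)
        grad.append(diff / (2 * h))
    return grad


z = [2.0, 0.5, -1.0]  # hypothetical logits
eps, k = 0.1, 3
y = [(1 - eps) * t + eps / k for t in [1.0, 0.0, 0.0]]  # smoothed one-hot target
gap = max(abs(a - n) for a, n in zip(analytic_grad(z, y), numeric_grad(z, y)))
print(gap < 1e-6)  # True
```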

PDF Efficient Learning with Soft Label Information and Multiple Annotators We propose multiple methods, based on regression, max-margin and ranking methodologies, that use the soft label information to learn better classifiers with less training data and hence less annotation effort. We also study our soft-label approach when the examples to be labeled next are selected online using active learning.

Scaling techniques in Machine Learning - GeeksforGeeks 04.12.2021 · Note: Generally the most preferred shampoo is placed on the top while the least preferred at the bottom. Non-comparative scales: In non-comparative scales, each object of the stimulus set is scaled independently of the others. The resulting data are …

Key Concepts in Machine Learning - Coursera 13.10.2017 · So the first step in solving a problem with machine learning is to figure out how to represent the learning problem in terms of something the computer can understand. You need to be able to take your data, or formulate a description of the object you're interested in recognizing, in a form you can use as input to an algorithm. And you …

A Gentle Introduction to Cross-Entropy for Machine Learning Cross-entropy is commonly used in machine learning as a loss function. Cross-entropy is a measure from the field of information theory, building upon entropy and generally calculating the difference between two probability distributions. It is closely related to, but different from, KL divergence, which calculates the relative entropy between two probability distributions, whereas cross-entropy
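
The relationship to KL divergence can be made concrete with the standard identity H(p, q) = H(p) + KL(p || q): cross-entropy equals the entropy of the target p plus the KL divergence between target p and prediction q. With a fixed soft label p, H(p) is constant, so minimizing cross-entropy is equivalent to minimizing KL divergence. A minimal sketch with hypothetical distributions, in natural-log units:

```python
import math


def entropy(p):
    """H(p) in nats; terms with p_i = 0 contribute nothing."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def cross_entropy(p, q):
    """H(p, q) = -sum_i p_i log q_i (requires q_i > 0 wherever p_i > 0)."""
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)


def kl_divergence(p, q):
    """KL(p || q) = sum_i p_i log(p_i / q_i)."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


p = [0.7, 0.2, 0.1]  # hypothetical soft label (target)
q = [0.5, 0.3, 0.2]  # hypothetical model prediction
print(abs(cross_entropy(p, q) - (entropy(p) + kl_divergence(p, q))) < 1e-12)  # True
```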

Multi-Class Neural Networks: Softmax | Machine Learning - Google Developers Candidate sampling means that Softmax calculates a probability for all the positive labels but only for a random sample of negative labels. For example, if we are interested in determining whether...
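
A minimal sketch of the candidate-sampling idea: the softmax is normalized over all positive labels plus a uniform random sample of negatives rather than over the whole label set. The function name, the uniform sampler, and the data are hypothetical, and the sketch omits the correction for sampling probabilities that practical sampled-softmax losses apply, so it illustrates the mechanism rather than an unbiased estimator.

```python
import math
import random


def sampled_softmax_probs(logits, positives, num_negatives, rng):
    """Softmax over all positive labels plus a uniform sample of negative labels.

    logits: list of scores, one per label; positives: set of positive label indices.
    Simplified illustration: no correction for the sampling probabilities.
    """
    pool = [k for k in range(len(logits)) if k not in positives]
    sampled = rng.sample(pool, min(num_negatives, len(pool)))
    candidates = sorted(positives) + sampled
    m = max(logits[k] for k in candidates)  # stabilize the exponentials
    exps = {k: math.exp(logits[k] - m) for k in candidates}
    total = sum(exps.values())
    return {k: e / total for k, e in exps.items()}


rng = random.Random(0)                 # fixed seed for a reproducible sample
logits = [0.1 * i for i in range(10)]  # hypothetical scores for 10 labels
probs = sampled_softmax_probs(logits, positives={2, 7}, num_negatives=3, rng=rng)
print(len(probs))  # 5: two positives plus three sampled negatives
```

Only the candidate logits are exponentiated, which is where the savings come from when the label set is large.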

Labelling Images - 15 Best Annotation Tools in 2022 - Folio3AI Blog For this purpose, the best machine learning as a service and image processing service is offered by Folio3 and is highly recommended by many. ... Its algorithm-based automation features include a pre-labeling feature that pre-labels image data using an existing machine learning (ML) model. Label Studio also has a vibrant user base and an active ...
